A Comparison of Neural Networks for Sign Language Recognition with LSA64

Abstract

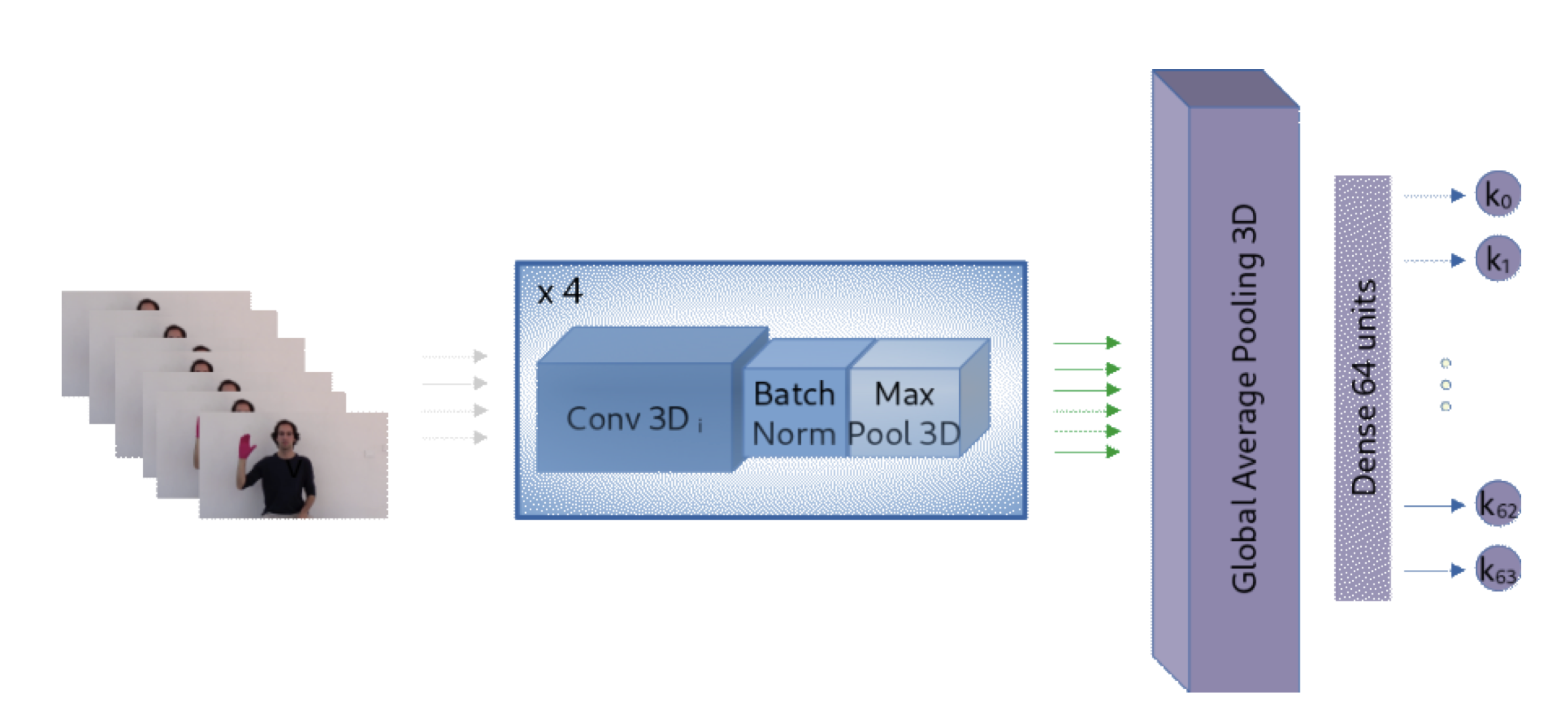

The performance of Sign Language Recognition models has increased steadily in recent years, fueled by Neural Network models. Furthermore, generic Neural Network models have taken precedence over specialized models designed specifically for Sign Language. Despite this, the completeness and complexity of datasets have not scaled accordingly. This deficiency presents a significant challenge for deploying Sign Language Recognition models, especially given that Sign Languages are specific to countries or even regions. Following the trend toward generic models, we experiment with three models built on standard recurrent and convolutional neural network layers. We evaluate the models on LSA64, the only Argentinian Sign Language dataset available. Coupled with simple but carefully chosen hyperparameters and preprocessing techniques, these models all achieve near-perfect accuracy on LSA64, surpassing all previous models, many of which were specifically designed for this task. Furthermore, we perform ablation studies indicating that temporal data augmentation can provide a significant boost to accuracy, unlike traditional spatial data augmentation techniques. Finally, we analyze the activation values of the three models to understand the types of features learned, and find that they develop hand-specific filters to classify signs.

Citation

@inproceedings{mindlin2021comparison,

title={A Comparison of Neural Networks for Sign Language Recognition with LSA64},

author={Mindlin, Iv{\'a}n and Quiroga, Facundo and Ronchetti, Franco and Bianco, Pedro Dal and R{\'\i}os, Gast{\'o}n and Lanzarini, Laura and Hasperu{\'e}, Waldo},

booktitle={Conference on Cloud Computing, Big Data \& Emerging Topics},

pages={104--117},

year={2021},

organization={Springer}

}